Helpful Bash Script - git reset all

22 Sep 2021

I use this script at work and at home. It saves me a lot of time when I need all of my local repositories to be up to date with the master branch.

It’s a simple script: it iterates through a folder (in my case, ~/Documents) and checks each directory to see if it’s a git repository. If so, it discards any uncommitted changes (git reset --hard and git clean), checks out master, and pulls the latest commits. Optionally, you can also have the script delete all local branches other than master, or run yarn install in any directory with a yarn.lock file.

If you go on vacation for a week and come back to all of your repositories out-of-date, this script is a lifesaver.

#!/bin/bash

IGNORED_DIRECTORY="personal-scripts"

# Ensure we're in the correct directory (update this directory to your preference)

cd ~/Documents || exit 1 # Bail out rather than resetting repos in the wrong place

# Loop through each sub-directory and perform some work on them

for f in *; do

if [ -d "$f" ]; then

# Ignore this directory when making changes

if [ "$f" = "$IGNORED_DIRECTORY" ]; then

echo "Ignoring $IGNORED_DIRECTORY"

continue 1

fi

cd "$f" || continue # $f is a directory, so we can cd into it (skip it if we can't)

if [ -d .git ]; then

# This directory has a .git directory :)

git reset --hard

git clean -d -f

git checkout master

git pull

### Warning: This will delete ALL local branches aside from master!

# Only uncomment if you really, really want this!

# git branch | grep -v -x '[* ] master' | xargs git branch -D

### Warning: This installs all yarn packages in all directories

# Very slow, but very useful!

# if [ -f yarn.lock ]; then

# echo "yarn.lock exists, installing packages."

# yarn install

# fi

else

echo "/${f}/ does not have a .git directory."

fi

cd ..

fi

done
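Since the script runs git reset --hard and git clean in every repository, any uncommitted work is gone afterwards. Here is a minimal sketch of a pre-flight check that lists the repositories with local changes, so you can look before you leap. The helper names repo_is_dirty and list_dirty_repos are my own placeholders, not part of the script above.

```shell
# Hypothetical helper (not part of the original script): succeeds when the
# repository at "$1" has uncommitted or untracked changes.
repo_is_dirty () {
  [ -n "$(git -C "$1" status --porcelain 2>/dev/null)" ]
}

# Hypothetical helper: prints each sub-directory of "$1" that is a git
# repository with local changes, one path per line.
list_dirty_repos () {
  for repo_dir in "$1"/*/; do
    # Same check as the main script: only directories with a .git directory
    [ -d "${repo_dir}.git" ] || continue
    if repo_is_dirty "$repo_dir"; then
      echo "${repo_dir%/}"
    fi
  done
}
```

Running list_dirty_repos ~/Documents before the reset script shows which projects still have work you might want to commit or stash first.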

Get a Pull Request by Number - Bash Function

22 Sep 2021

If you’re working on an open-source project, you’ll find yourself frequently pulling down other people’s PRs. Here’s a function to add to your .bash_profile that lets you “git” (hehe) a PR by just providing the PR number. It relies on the pull/<number>/head refs that GitHub exposes for every pull request.

# Add this to your .bash_profile

# Just pass a number (e.g. 451) and the branch will be pulled down for you

# Great for working with lots of forks

gitpr () {

git fetch origin "pull/$1/head:pr/$1" && git checkout "pr/$1"

}

Usage:

# Call `gitpr` with the number of the PR you're trying to pull down

gitpr 451

# That's it! You'll be switched to branch 'pr/451' and ready to review!
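If you want something a bit more defensive, here is a sketch of a variant that rejects anything that isn't a number and lets you pick the remote. The name gitpr_checked and the optional second argument are assumptions of mine, not part of the function above.

```shell
# Hypothetical variant of gitpr: validates the PR number and accepts an
# optional remote name (defaults to origin).
gitpr_checked () {
  case "$1" in
    ''|*[!0-9]*)
      # Empty, or contains something other than digits
      echo "usage: gitpr_checked <pr-number> [remote]" >&2
      return 1
      ;;
  esac
  pr_remote="${2:-origin}"
  git fetch "$pr_remote" "pull/$1/head:pr/$1" && git checkout "pr/$1"
}
```

For example, gitpr_checked 451 upstream would fetch the PR from a remote named upstream instead of origin, which can be handy when origin is your own fork.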

Creating Effective Presentations with stock Apple applications

21 Sep 2021

When you don’t have access to advanced editing software (e.g. on work devices), sometimes you’re going to need to abuse the stock applications installed on your Mac.

I like to use:

- iMovie

- QuickTime Player

- Keynote

For creating images for animations, I like to use SimpleDiagrams4, which is free for a 7 day trial and then 50 bucks. Super worth it IMO.

Creating Diagrams

As mentioned, I use SimpleDiagrams4. I make sure I download all of the free libraries on their site so I have a large range of shapes and icons to work with.

I make the document size 4000x4000px. This lets me zoom in on parts of the diagram later without ugly aliasing effects.

SimpleDiagrams4 does not support animation, but you can create different frames and export them. We’ll animate them in Keynote later.

When you’re happy, export your diagrams as a PNG file, and uncheck the Export background option - we want a transparent background.

Animating Diagrams

This part takes a lot of finesse. Import your SimpleDiagrams PNG file into a blank slide in Keynote.

There are two ways to animate slides.

Method 1 contains all of the animation logic in one slide, and it’s great for small animations, but can get REALLY confusing when you move around more than 5-6 times.

Method 2 will create a bunch of slides, and is not as customizable as Method 1 - but I recommend using this method until you know for sure you need Method 1.

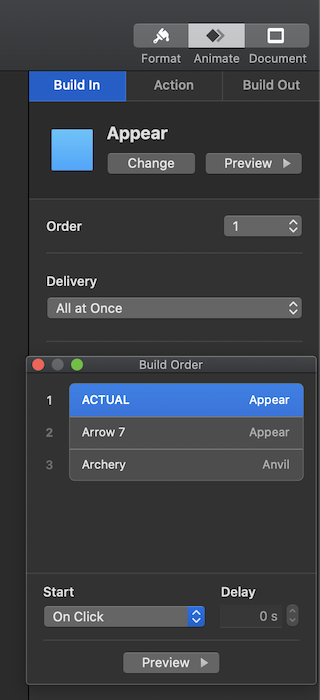

Method 1 involves using Keynote’s built-in animation system. This will involve a mixture of Build In, Action, and Build Out animations. These are governed by the Build Order. You can use the Build Order to make really fine-grained animations with specific delays and start/stop points.

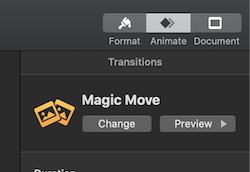

Method 2 involves abusing Keynote’s built-in Magic Move between-slide transition. This amazing transition detects similar objects (or words or characters) and tweens them across distinctly different slides. This workflow involves creating one slide for each tween of animation. It’s not very configurable, but it works really well for scooting around a large image with smooth movement.

I prefer to use Method 2 unless I have to animate multiple things at once.

This part is more art than science, so be prepared to work hard on it.

Exporting your animated diagram

- In Keynote, Play->Record Slideshow

- Record yourself animating the slides

- File->Export To->Movie

Recording your screen

- Open QuickTime Player

- File->New Screen Recording

- Start Recording->Stop Recording->Save

Feel free to narrate your actions, or not. We can always add voiceovers later.

You will now have a .mov file with your screen recording.

Editing your screen recording

- Open iMovie

- Import your .mov file and put it on the timeline

- When finished, export your file (see Editing and Exporting Your Presentation below)

Editing and Exporting Your Presentation

- Open iMovie

- Go nuts

- You can re-record voiceovers by hitting

V - When you’re satisfied,

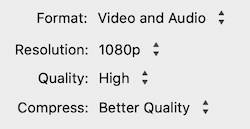

File->Share->File - Use the following settings

Turning a recording into a GIF

Note: make sure you convert .mov files to .mp4 via iMovie before doing this.

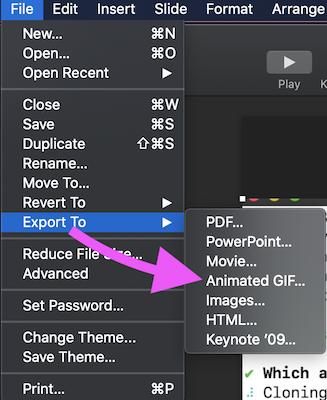

- Open Keynote

- Create a slide that’s just your video file

- File->Export To->Animated GIF

Compressing your GIF

Once you have your .gif - use gifsicle to compress it.

https://formulae.brew.sh/formula/gifsicle

# Compress input.gif

gifsicle -O3 --colors 256 input.gif -o output.gif
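If you end up with a folder full of GIFs, the same command can be wrapped in a loop. This is a rough sketch using the same -O3 and --colors 256 settings. The function names min_name and compress_gifs and the .min.gif naming scheme are assumptions of mine, so adjust to taste.

```shell
# Hypothetical helper: derive the output name, e.g. demo.gif -> demo.min.gif
min_name () {
  echo "${1%.gif}.min.gif"
}

# Hypothetical helper: compress every .gif in directory "$1" (default: the
# current directory), writing each result next to the original.
compress_gifs () {
  if ! command -v gifsicle >/dev/null 2>&1; then
    echo "gifsicle not found (try: brew install gifsicle)" >&2
    return 1
  fi
  for gif in "${1:-.}"/*.gif; do
    [ -f "$gif" ] || continue
    # Skip files that a previous run already produced
    case "$gif" in *.min.gif) continue ;; esac
    gifsicle -O3 --colors 256 "$gif" -o "$(min_name "$gif")"
  done
}
```

Calling compress_gifs ~/Desktop/gifs leaves the originals alone and writes a .min.gif copy of each one, so you can compare quality before deleting anything.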

Creating demo GIFs

For creating simple GIFs, I like to use LICEcap.

Make sure you save your file name as [file-name].gif (if you don’t include the .gif suffix, it’ll fail on Macs.)

Air Cooling a Buttkicker Gamer 2

27 Feb 2021

Is your Buttkicker Gamer 2 overheating when playing iRacing or other sim racing games? Mine was.

The Buttkicker company is aware of the issue (I sent them an email), but they can only do so much - running high intensity settings while sim racing is going to be hard on the Buttkicker, period.

Hello Davis,

I’m glad you’re enjoying the Gamer 2!

What you’re experiencing is the thermal cutoff switch being engaged in the transducer, which shuts itself then resumes once cooled off.

- Buttkicker Support Team

Before the following modification, I was able to get only about 40 minutes of runtime before the Buttkicker would overheat.

A few beers, some scrap metal, and two USB fans later, my Buttkicker Gamer 2 is now capable of long sessions at high intensity.

Here is a slo-mo video of the results.

The Buttkicker no longer overheats!

This is a 100% necessary addition to the cockpit.

I control the fans (they are daisy chained together) with an on/off switch on the front of the cockpit.

I love it. I feel like a pilot with a pre-flight checklist:

“Power fans on. Check. Power amplifier on. Check”

What you’ll need:

- Some scrap metal

- Drill

- Buttkicker Gamer 2

- RSeat N1 Buttkicker mount

- A dollar and a dream

- Someone more mechanically gifted than me